Evaluating evidence is a cornerstone of both academic research and practical decision-making. The process involves rigorously examining the credibility, accuracy, and reliability of information before it is utilized to support a hypothesis or inform a conclusion.

This methodical assessment is essential to distill truth from misinformation and to uphold the integrity of conclusions drawn in various fields, from science to the humanities.

Proper evaluation of evidence dictates that one must not only look at the content of the material presented but also scrutinize the context in which it exists, ensuring that it is current, relevant, and applicable to the situation at hand.

The types and sources of evidence vary widely, ranging from empirical data to expert testimony, each with its own merit and application. Recognizing the form that evidence takes is crucial for understanding how it should be weighed and integrated into a broader argument or study.

Employing sound principles of critical appraisal enables individuals to navigate complex information landscapes and to synthesize diverse findings.

This requires an understanding of the concepts and methodologies intrinsic to evidence-based practice, including the ability to read and interpret results effectively and use research resources appropriately.

Key Takeaways

- Scrutinizing evidence for credibility and relevance is critical to forming solid conclusions.

- Understanding different types of evidence is essential for accurate appraisal and application.

- Applying key principles of critical appraisal helps integrate evidence into practice effectively.

The Fundamentals of Evidence Evaluation

Evaluating evidence is an essential step in evidence-based practice. It ensures that information applied in clinical settings or other professional arenas is both relevant and reliable. The process of critical appraisal underpins this evaluation, serving as a methodical approach to assess the quality of research.

In evidence-based practice, practitioners raise pertinent questions about patient care or a specific clinical issue. The next step involves seeking out the best available evidence to answer these questions.

Once obtained, the evidence must be methodically evaluated to determine its applicability and strength.

The evaluation phase consists of several components:

- Validity: Checking whether the study's design and conduct make its results credible.

- Relevance: Ensuring the evidence pertains directly to the initial question posed.

- Reliability: Assessing if the study’s findings can be consistently replicated.

An effective evaluation involves a critical appraisal that meticulously examines both the methodology and the outcomes of research studies. Practitioners must consider variables such as study design, sample size, bias, and the robustness of conclusions.

Types of Evidence

In evaluating research, it is essential to understand the different types of evidence. These vary in methodology, rigor, and reliability. Let’s explore the key types of evidence used in evidence-based practice.

Evidence Hierarchy

The hierarchy of evidence guides the evaluation of literature, with different levels ranking the strength and reliability of evidence. At the top, systematic reviews and meta-analyses provide powerful summaries of available evidence. Further down the hierarchy, individual studies offer varying levels of strength, with some being more prone to bias.

Randomized Controlled Trials

Randomized controlled trials (RCTs) are a form of experimental study and are widely considered the gold standard among individual study designs. Participants are randomly assigned to a treatment or control group, which helps minimize bias and supports causal conclusions about interventions and outcomes.

Systematic Reviews

Systematic reviews synthesize results from multiple studies to draw overarching conclusions. They rigorously assess the literature by including only studies that meet pre-defined criteria, providing a comprehensive overview of the evidence on a specific topic.

Experimental and Quasi-Experimental Studies

Experimental studies employ controlled conditions to understand cause-and-effect relationships. In contrast, quasi-experimental studies lack random assignment but still attempt to identify causal impacts through alternative designs, like time-series analyses or matched groups.

Qualitative Designs and Studies

Qualitative studies focus on understanding human experiences and behaviors through methods such as interviews and observations. They yield rich, detailed data that can offer insights into the context and meaning of health practices and phenomena.

Mixed Methods Research

Mixed methods research combines elements of both qualitative and quantitative studies. It offers a more nuanced understanding of research questions by integrating numerical data with the depth of qualitative evidence.

Principles of Critical Appraisal

Critical appraisal is a systematic process used to identify the strengths and weaknesses of a research study, assess its relevance, and evaluate the evidence presented. This process is crucial in determining the value of study findings for clinical decision-making and policy formation.

Assessing Research Quality

Researchers establish the quality of a study by looking at various core aspects, such as the study’s design, methodology, and rigor.

High-quality research should have clear objectives, appropriate methodology, and rigorously applied study protocols. Quality assessment tools, such as the protocol outlined in the Principles of Critical Appraisal from Cochrane, provide a structured way to evaluate these elements.

Analyzing Study Designs

The design of a study significantly affects its outcomes. Prospective cohort studies, for example, can establish temporal relationships, while randomized controlled trials are the gold standard for establishing causality. Each design type has its own set of standards that, when met, strengthen the plausibility of the study's findings.

Evaluating Outcomes Relevance

The relevance of study outcomes is judged by their effectiveness and applicability to the target demographic.

Evaluators must discern whether the study’s outcomes are not only statistically significant but also clinically significant and directly relevant to the patient population or research question at hand.
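One way to make this distinction concrete is to compare an effect estimate and its confidence interval against a minimal clinically important difference (MCID). The sketch below is illustrative only: the helper name `appraise_effect`, the blood pressure figures, and the 5 mmHg threshold are assumptions chosen for the example, not values from any study.

```python
def appraise_effect(mean_diff, ci_low, ci_high, mcid):
    """Classify an effect estimate (hypothetical helper, not a standard API).

    mean_diff        -- estimated difference between groups
    ci_low, ci_high  -- bounds of its 95% confidence interval
    mcid             -- smallest difference considered clinically meaningful
    """
    # Statistically significant (at the 5% level) if the interval excludes zero
    statistically_significant = ci_low > 0 or ci_high < 0
    # Clinically significant here means the point estimate reaches the MCID
    clinically_significant = abs(mean_diff) >= mcid
    return statistically_significant, clinically_significant


# Assumed example: a drug lowers systolic blood pressure by 2 mmHg
# (95% CI 0.5 to 3.5), but only a 5 mmHg drop is judged meaningful.
stat_sig, clin_sig = appraise_effect(2.0, 0.5, 3.5, mcid=5.0)
print(stat_sig, clin_sig)  # True False
```

A stricter check could require the whole confidence interval, not just the point estimate, to clear the MCID.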

Understanding Strength and Impact

The impact of a study is often associated with the magnitude of its effects and the quality of the evidence.

Strong studies have the potential to influence clinical practice and policy decisions, implying that their findings have both solid internal validity and external applicability.

Spotting Flaws and Biases

Identifying potential flaws and biases is a critical component of critical appraisal.

Bias can emerge at various stages of research, from data collection to analysis and interpretation. Recognizing biases, such as selection bias or confirmation bias, is essential for judging how well a study's conclusions are supported and how credible the study is overall.

Application in Practice

Incorporating evidence into clinical practice ensures that healthcare decisions are based on the most up-to-date and relevant information. This approach leverages outcomes from evidence-based medicine, integrates personal clinical expertise, and informs policies at the public health and organizational levels. Ensuring continuous improvement is pivotal for sustaining high-quality patient care.

Evidence-Based Medicine

Evidence-based medicine (EBM) utilizes current, high-quality research to guide healthcare decisions.

Clinicians apply systematic reviews and randomized controlled trials to tailor care to individual patients, ensuring interventions are both effective and efficient. This methodology emphasizes the use of the best available evidence while taking into account patient preferences and values.

Clinical Expertise Integration

The integration of clinical expertise involves combining practical experience with academic research.

Healthcare providers assess patient needs and risks by interpreting evidence in the context of their vast clinical experience. This allows them to customize treatments and improve patient outcomes, bridging the gap between theory and practice.

Public Health and Policy

Evidence-based approaches inform public health interventions and policy-making processes.

Authorities review epidemiological studies and health outcomes research to develop guideline recommendations. This ensures that interventions, like vaccination programs or screening guidelines, are effectively implemented at a population level to improve overall health and safety.

Organizational Implementation

Organizations play a crucial role in adopting EBM by fostering an environment that supports and encourages the application of research findings.

Training programs and workshops can help clinicians stay current with advances. Healthcare institutions often establish protocols and resources to translate evidence into practice efficiently.

Strategies for Continuous Improvement

Continuous improvement strategies involve regular review and adaptation of practice guidelines.

Healthcare providers must remain abreast of new evidence and be prepared to adjust their approaches. Audit and feedback mechanisms are useful tools that help organizations gauge the success of implemented strategies and identify areas for improvement.

Reading and Interpreting Results

When one approaches the task of reading and analyzing research results, it is crucial to focus on the appropriateness of outcomes being measured. Scientific rigor and robust methodology should be the cornerstone of any data set being evaluated.

A systematic approach in interpreting results ensures that the conclusions drawn are sound and reliable.

In examining results, one must look for:

- Clear definition and relevance of the outcomes

- Statistical significance of the findings

- The impact and applicability of the results in the real world

Researchers often employ a variety of analytical methods to explore data. Understanding these methods is essential in accurately interpreting the results they produce.

For example, when reviewing a meta-analysis, one must verify the quality of the included studies and their relevance to the review question.

Tabular presentations can streamline complex information, such as showing relative and absolute effects for dichotomous outcome data, which includes measures like the number needed to treat (NNT).

| Measure Type | Description |
|---|---|
| Relative effect | Ratio of the outcome risk between groups (e.g., risk ratio) |
| Absolute effect | Difference in outcome risk between groups (e.g., risk difference) |
| NNT | Number of patients who need to be treated for one additional beneficial outcome |
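The measures in the table can be worked through with a small calculation. The following is a minimal sketch using made-up trial counts; the function name `effect_measures` and the numbers are assumptions for illustration, and it treats the outcome as an adverse event that the treatment is meant to prevent.

```python
def effect_measures(events_treated, n_treated, events_control, n_control):
    """Compute relative and absolute effects for a dichotomous outcome."""
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    # Relative effect: ratio of the two risks (risk ratio)
    relative_risk = risk_treated / risk_control
    # Absolute effect: how much the treatment lowers the event risk
    absolute_risk_reduction = risk_control - risk_treated
    # NNT is the reciprocal of the absolute risk reduction;
    # it is undefined (shown here as infinity) when there is no benefit
    if absolute_risk_reduction > 0:
        nnt = 1 / absolute_risk_reduction
    else:
        nnt = float("inf")
    return {
        "relative_risk": relative_risk,
        "absolute_risk_reduction": absolute_risk_reduction,
        "nnt": nnt,
    }


# Hypothetical trial: 10 of 100 treated patients have the event,
# compared with 20 of 100 patients in the control group.
result = effect_measures(10, 100, 20, 100)
print(result)
```

With these assumed counts, the risk ratio is 0.5, the absolute risk reduction is 0.1, and the NNT is 10. In practice the NNT is usually rounded up to the next whole patient.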

It is also important to assess whether the results have been communicated effectively, for instance by checking how the conclusions of the review are reported.

The reader must also take into account how the information is presented—whether it facilitates an understanding that leads to sound, evidence-based decisions. One should not overlook the importance of ensuring that results align with the patients’ values and preferences, which is a fundamental aspect of evidence-based medicine.

Utilizing Research Resources

When embarking on a systematic review, researchers prioritize the discovery of high-quality, relevant studies to inform their research questions.

Efficient utilization of research resources is imperative to the success of this process.

Selecting Databases and Journals:

A comprehensive search often begins with databases such as PubMed, which provides access to biomedical literature.

Researchers may further explore specialized databases depending on their field of study.

Key databases include:

- PubMed for biomedical articles.

- IEEE Xplore for engineering and technology.

- PsycINFO for psychological studies.

Developing Search Strategies:

Crafting detailed search strategies that include relevant keywords and phrases ensures that the collected evidence aligns with the research questions.

Boolean operators such as AND, OR, and NOT refine search results effectively:

- AND narrows the search by requiring all of the combined terms.

- OR broadens the search to include any of the terms.

- NOT excludes specific terms from the search.
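The operators above can be combined into a single search string. The sketch below is a hypothetical helper, not the query syntax of any particular database: `build_query`, its parameters, and the search terms are assumptions, and exact rules for quoting and operator precedence vary between databases.

```python
def build_query(all_of, any_of=(), none_of=()):
    """Combine search terms with AND, OR, and NOT into one query string."""

    def quote(term):
        # Quote multi-word phrases so they are searched as a unit
        return f'"{term}"' if " " in term else term

    # Terms that must all appear are joined with AND
    parts = [quote(t) for t in all_of]
    if any_of:
        # Alternatives are grouped in parentheses and joined with OR
        parts.append("(" + " OR ".join(quote(t) for t in any_of) + ")")
    query = " AND ".join(parts)
    # Each excluded term is appended with NOT
    for term in none_of:
        query += f" NOT {quote(term)}"
    return query


query = build_query(
    all_of=["hypertension", "exercise"],
    any_of=["randomized controlled trial", "systematic review"],
    none_of=["pediatric"],
)
print(query)
# hypertension AND exercise AND ("randomized controlled trial" OR "systematic review") NOT pediatric
```

Grouping the OR terms in parentheses keeps the alternatives together, so the AND terms apply to the whole group rather than to the first alternative only.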

Evaluating Resource Quality:

Assessing the credibility and relevance of resources is a critical step.

It is achieved through a critical appraisal of the evidence, considering factors such as methodology, bias, and applicability.

Lateral Reading:

Researchers may engage in lateral reading, a method that tests the credibility of a source by checking it against other resources, to ensure a holistic understanding of the topic.

Setting the Context

Evaluating evidence within its context is critical for determining its applicability to specific research questions, especially when considering the effectiveness, safety, and potential harm of therapies or preventive measures.

When considering the setting and timeframe, researchers must examine how these factors influence the outcomes and relevance of the evidence.

1. Applicability of research:

- Pertinence to the specific environment or population

- Importance of contextual factors such as culture and leadership

2. Effectiveness:

- Evidence should be assessed for its performance under various conditions.

- How outcomes might vary in different settings

3. Safety and harm:

- Consideration of environment’s influence on safety outcomes

- Risk evaluation within the specific context

4. Therapy and prevention:

- Impact of context on the implementation of interventions

- The role of setting in therapeutic and preventive success rates

Researchers often use frameworks like the Consolidated Framework for Implementation Research (CFIR) to comprehensively describe the context and help interpret findings.

Such frameworks bolster the credibility and utility of systematic reviews, particularly when parsing complex interventions that are context-dependent.

The term "context," from the Latin contexere, "to weave together," aptly describes the integration of interventions with specific healthcare environments.

To assess context effectively, it is vital to detail the setting, cultural nuances, organizational characteristics, and time-related aspects.

These considerations ensure that the evidence aligns with practical scenarios, allowing stakeholders to make informed decisions regarding healthcare implementation.

Building an Evidence-Informed Argument

In developing an evidence-informed argument, one must be meticulous in selecting evidence that is credible and directly pertinent to the claim.

The argument should be built upon a foundation of facts and data that support the central thesis effectively.

Selection of Evidence:

- Quality over Quantity: A few well-substantiated pieces of evidence can be far more convincing than a large quantity of weak facts.

- Relevance: Evidence must be directly related to the argument, providing strong support for the points made.

Analysis and Evaluation:

- Critical Assessment: Scrutinize each piece of evidence. Is it current? Does it come from a reliable source? How does it compare to other available evidence?

- Objectivity: Maintain a neutral, unbiased perspective, and evaluate all sides of an argument to ensure a comprehensive understanding.

Synthesis of Information:

- When synthesizing information, one creates an interconnected chain of evidence.

- Each piece logically corroborates the next, creating a cohesive argument.

- Use logical reasoning to show how the evidence supports the argument’s claims, creating clear connections between the data and the thesis.

Presenting Evidence:

- Organize evidence in a logical order, typically starting with the most compelling details.

- Ensure that the evidence is presented clearly and concisely, with a straightforward explanation of how it supports the point being made.

Decisions and choices throughout the process should be deliberate and well-founded.

Scholars must not hesitate to question the validity and reliability of their evidence, as this will only strengthen the final argument.

Emerging Trends in Evidence Evaluation

Evaluating the quality and applicability of evidence in healthcare is an ongoing challenge. In response, there are emerging trends that focus on enhancing the evaluation process.

The healthcare community is witnessing a shift toward a more nuanced approach that prioritizes quality improvement and practical guidance.

One key development is the establishment of frameworks that guide practitioners through the evaluation process.

These are grounded in the core principle that evidence should not only be valid but also ethically gathered and clinically applicable.

Research is moving beyond traditional measures to develop evaluation tools that scrutinize the relevance and contextual appropriateness of evidence.

- Scoping Reviews have emerged as a method to assess the breadth of evidence on a given topic, ensuring the literature is thoroughly surveyed before conclusions are drawn.

- Rapid Evidence Synthesis has become increasingly sought after, generating swift insights to support time-sensitive decision-making.

- The push for transparency has fostered the creation of clear reporting standards, improving the way evidence is communicated and assessed.

Efforts are also being made to standardize the levels of evidence, offering clear-cut tiers to differentiate studies by their methodological quality.

Such standardized systems are starting to have a tangible impact on various stakeholders, including policymakers and researchers.

In the world of published academic literature, tools and indexes that aid in the rapid identification and classification of evidence are being further refined.

These advancements facilitate the integration of research findings into clinical practice, aiming to reduce variation in care and promote evidence-informed decision-making.

Conclusions

The process of drawing conclusions from evidence involves a careful and structured approach to determining what the accumulated information signifies.

This is the stage where the critical appraisal of evidence comes to fruition. Through its diligent application, one can assess whether the evidence is reliable, credible, and useful.

- Reliability of evidence is gauged by examining the consistency of the results across multiple studies or sources.

- Credibility focuses on the trustworthiness of the source, authorship, and the methodological soundness of the evidence.

- Usefulness pertains to the evidence’s applicability to the real-world scenario or question at hand.

When evaluating evidence, researchers must consider both the strengths and weaknesses discovered during the appraisal phase.

This balanced view helps in forming a justifiable and substantiated conclusion.

The importance of assessing the evidence's impact on existing knowledge or practices cannot be overstated. This assessment serves as the bridge between research findings and practical implementation.

Researchers also need to recognize the boundaries of their conclusions.

Evidence rarely answers a question in its entirety, often leading to recommendations for further study or acknowledgment of the evidence’s limitations.

Conclusions should be presented in a manner that reflects the evidence’s depth and breadth, without overstating the case.

By adhering to these principles, the final judgment becomes a tool for informed decision-making, guiding future research, and contributing to the larger field of knowledge.

Frequently Asked Questions

Evaluating evidence is critical in various fields such as academia, psychology, scientific research, and law. This section answers common inquiries regarding assessment techniques and methodologies to ensure evidence is thoroughly and accurately interpreted.

What are the steps to critically assess evidence in an academic essay?

To critically assess evidence in an academic essay, one starts by identifying the source of the information, assessing its credibility, and analyzing the relevance to the topic.

This process includes examining the methods used to generate the evidence, evaluating the results, and considering the potential for bias or other errors.

Can you provide an example to illustrate how to properly evaluate evidence?

An example of evaluating evidence is examining a study on the effects of a new heart medication.

Reviewing the study’s design, sample size, consistency of results, and potential conflicts of interest will provide insight into the reliability of the conclusions.

What methodologies are applied when evaluating evidence within psychological research?

In psychological research, evidence is evaluated using methodologies like peer review, replication studies, meta-analyses, and consistency with established theories.

Researchers may also assess the validity and reliability of the tools used to gather data.

How is evidence appraised and tested in the context of scientific research?

Scientific research appraises and tests evidence through controlled experiments, peer review, and statistical analysis.

Rigorous methods and repeatability are key to determining the reliability of scientific evidence.

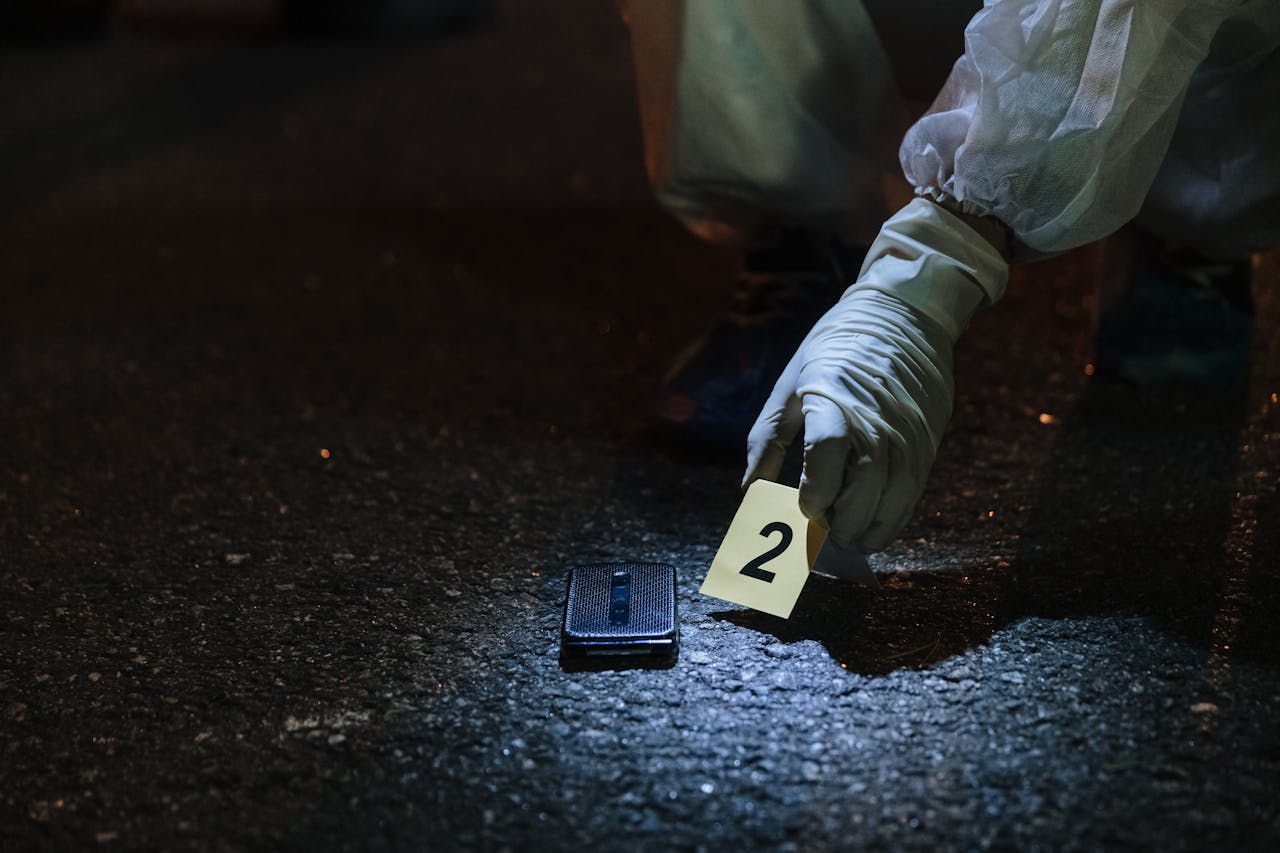

What criteria are used in the legal system to evaluate the validity of evidence in criminal cases?

The legal system uses criteria such as relevance to the case, authenticity, the credibility of the source, and adherence to the rules of evidence, including the exclusion of hearsay and the need for a documented chain of custody.

What approaches are recommended for deconstructing and assessing arguments effectively?

To deconstruct and assess arguments effectively, one should identify the premises and conclusion. Then, scrutinize the logical structure, check for fallacies or biased reasoning, and evaluate the strength of the link between evidence and argument.